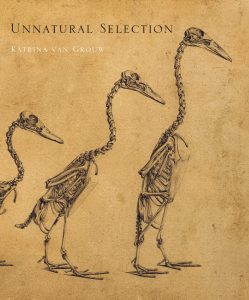

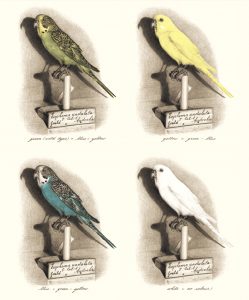

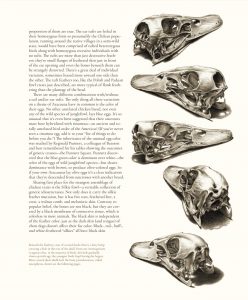

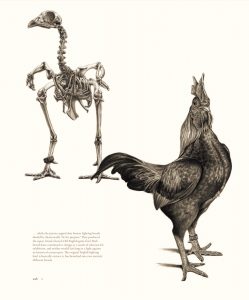

After her incredibly successful book The Unfeathered Bird, Katrina van Grouw has recently finished Unnatural Selection, a beautiful combination of art, science and history. In this book, she celebrates the rapid changes breeders can bring about in domesticated animals. This was a topic of great interest to Charles Darwin, and it is no coincidence that Unnatural Selection is published on the 150th anniversary of Darwin’s book The Variation of Animals and Plants under Domestication.

In this post we talk with Katrina about her background, the work that goes into making a book and plans for the future.

On your website, you write that you always had an interest in natural history, but that your talent in drawing made your teachers push you to pursue an art career, rather than studying biology. Did you ever consider a career as a scientific illustrator, something for which there must have been more of a market back then than there is now? If not, when and how did you decide to start combining your passion for biology with your talent as an artist?

No, I didn’t. There are several reasons for this; some a result of indoctrination, and others, decisions of my own.

There were two revelatory moments which brought art and science together for me and set the path for what was to come. Once when I’d rejected art and was sliding down a greasy pole into oblivion. And another, when I was an art student seeking direction. The first was at a zoo, and the second at a museum, and both were as vivid as a flash of light from the sky.

Even with art and natural history combined in my work, however, it was always in a fine art sense and never as an illustrator. I still don’t really identify with the term. Being an illustrator usually involves working to someone else’s brief and taking instructions from a non-illustrator about how the work should be done. I’m too self-obsessed for that! I lack imagination when it comes to commissioned work and can’t seem to generate much passion for other people’s projects, though I have the greatest respect for people who can do these things. I’m basically just no good at it!

You worked as curator of the bird skin collection of the London Natural History Museum. How did you end up there after an art degree?

How I ended up there is quite a long story. I do have a degree in art (two actually) but I also spent many years gaining valuable skills in practical ornithology that were precisely what the NHM needed; a combination of skills that was lacking in all the other applicants for the post.

I’d taught myself to prepare study skins and was good at it. I knew my way around the inside of a bird and had written a Master’s thesis on bird anatomy (albeit aimed at artists). I was a qualified ringer who’d held an A class ringing permit for many years, which meant that I could age and sex birds accurately and knew how to take precise measurements consistent with other field workers. I’d taken part in ornithological expeditions in Africa and South America, so I had some first-hand experience of non-European birds. I’d worked in other museums. And I was a birder.

It’s a sad fact that one’s education often defines how a person is categorised for the remainder of their life, but self-taught skills, and hands-on experience can be worth far, far more. People often assume that artists can only ‘do art’ and nothing more, and that only people with a science degree are able to ‘do science’. A great many people are able to do both (though fewer questions are raised when it’s a qualified scientist who turns his/her hand to art!)

Your previous book, The Unfeathered Bird, took some 25 years from conception to publication, mostly as you found it very difficult to find a publisher. How did you manage to convince Princeton University Press to publish this book after so many rejections?

The quick answer is, because Princeton University Press is a publisher of vision and wisdom! (And no, they didn’t pay me to say that).

The full story is that the majority of publishers I approached had entrenched preconceptions about what an anatomy book should be and were unable to envisage anything that wasn’t a highly academic technical manual aimed at a niche audience. The book I had in mind was geared toward a much broader spectrum of bird lovers, including and especially bird artists. Additionally, I wanted it to be beautifully produced and aesthetically pleasing. So it wasn’t so much a problem of not being able to find a publisher, but not being able to find a publisher willing to think outside of the box. To answer the question: I didn’t actually need to convince Princeton – a fortuitous meeting led to a great collaboration.

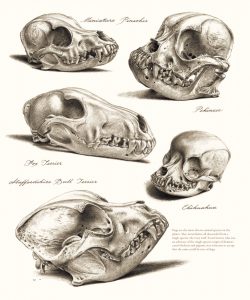

Your response to critics of breeding has been to counter their objection by saying “look at what nature has done to the sword-billed hummingbird!” which I thought was a sharp response. However, an animal welfare advocate might counter this argument by pointing out that natural selection can only push sword-billed hummingbirds so far. If this adaptation – the extension of the bill to retrieve nectar from ever deeper flower corollas – becomes maladaptive it will be selected against. Breeders, however, can select for traits that are maladaptive, because these animals grow up in an artificial environment where they are relieved of the pressures of natural selection. The shortened snouts and breathing problems of short-nosed dog breeds such as boxers come to mind. Obviously, if these traits become too extreme, the animals will not survive until reproductive age, but we can push them into a zone of discomfort and suffering through artificial breeding. What would your response to this be?

I’m an animal welfare advocate too. It’s difficult not to be when you keep animals and care for them every day. I too will freely admit that there are exhibition breeds in which artificial selection appears to have gone too far, resulting in health problems or discomfort. I can also appreciate that these problems might have their roots deeply embedded in history and culture and might be difficult to rectify without tearing down systems that would have devastating consequences to the entire fancy.

(Incidentally, the suffering of poultry selectively bred for the commercial meat industry is on a scale many thousands of times greater than the relatively low numbers of extreme pedigree breeds.)

The process of selecting out these physical defects will be a slow one and I think it’s important to support the work of breeders in this task. We can support them by trying to understand more about their world and by ceasing to attack them in gutter-press fashion with pseudo-scientific terms we don’t fully understand.

My book Unnatural Selection isn’t intended to voice personal opinions about animal welfare however. As the title suggests, it’s a book about evolution, based on and elaborating on the analogy that Darwin made between natural and artificial selection. For that reason I’ve discussed selective breeding solely within this evolutionary and historical context. It’s not that I was deliberately avoiding welfare issues; they simply weren’t relevant to the points I was discussing.

You write that the work on Unnatural Selection took six years of full-time work, around the clock. How long do you typically take to complete an illustration? And how do you manage to support yourself during this period? Do you have freelance illustration assignments on the side?

If I’m in practice I can usually complete a full-page illustration in two or three days. There are 425 illustrations in Unnatural Selection, not forgetting the 84,000 words of text (somehow people always forget the text…). Not to mention thousands of hours’ research and background reading. Working like this is all-consuming, and definitely very unhealthy.

The fact is that non-fiction books taking so long to produce will never, ever, pay for themselves. Luckily Husband works full time, so we don’t actually starve, though I’d prefer to be able to contribute more financially to the household.

I would love to supplement my books with a part time job, but it certainly wouldn’t be illustrating! I dislike illustrating for other authors. I actually get far more pleasure from writing and I’m equally good at it, though this side of me is unfortunately often eclipsed by the artwork.

People talk in airy-fairy terms about the freedom and personal reward of being an artist transcending material gain, but it’s not like that at all. It’s not the actual poverty that’s damaging, but the feeling of inadequacy you get from working so hard, with such integrity, for so long, yet making no money.

The things that make it worthwhile are making those books exist at the end of it all, and having people tell me how grateful they are.

With two books now published by Princeton University Press, you seem to have started a very successful collaboration. How has the reception of this book been so far? Have you received nominations for prizes?

Boy, I’d love to win a prize! It’s still early days yet, so I’m ever-hopeful. To be honest though, I suspect I’m not the sort of person who wins prizes. Prizes seem to be dished out to academics and people whose career has been rather more conventional than mine. Like my books, I rather defy taxonomy and, even though we communicate science exceedingly well, few institutions would be brave enough to award a science writing prize to a self-taught scientist.

That’s not to say that we’re unpopular; quite the opposite. I’m proud to say that The Unfeathered Bird was embraced by a huge range of people: birders, naturalists, painters, sculptors, taxidermists, poets, mask makers, puppeteers, aviators, falconers, bibliophiles, palaeontologists, zookeepers, creature-designers and animatronics-people, academic biologists and vets! The pictures have been used in a trendy Berlin cocktail bar, on Diesel t-shirts, and tattooed onto several people’s bodies, and I get very genuine letters of thanks from all manner of people, from university professors to 12-year-old boys and girls.

Unnatural Selection is a far better book than The Unfeathered Bird. It has better art and better science and, unlike The Unfeathered Bird in which the images take the lead, Unnatural Selection is very much led by good scientific and historical text, with the images serving solely to illuminate and enhance what’s being said. Everyone who’s seen it so far says it’s stunning, and the reviews have all been excellent. I hope the scientific community will take it seriously and not dismiss it as merely a quaint and witty book with good pictures. It’s so much more than that.

Will you continue to work on more books in the future? And are you already willing to reveal what you are working on next?

In answer to your first question: definitely, though if you’d asked me that toward the end of The Unfeathered Bird I would probably have said no. That book was supposed to have been a one-off, and I’d been looking forward to resuming work as an artist afterwards. However, when the time came I found that I’d moved on. Producing pictures for their own sake no longer ‘did it for me’. Books, on the other hand, tick all the boxes: creatively, intellectually; at every level.

It’s important to understand that these are not ‘art books’—they’re not collections of artwork made into a book. The book itself is the work of art, not the individual illustrations. They’re science books nevertheless. For me the challenge is communicating science in the best possible way and finding just the right unique angle for each book. I’m especially proud of Unnatural Selection which I think is the finest and most original thing I’ve ever created.

Unfortunately, large illustrated books take many years to produce so I probably won’t have sufficient time left to bring more than two or maybe three more into existence. After all, I’m no spring chicken.

I’ve already signed a contract with Princeton and begun work on a greatly expanded second edition of The Unfeathered Bird. The new book will have 400 pages (that’s 96 more than the first edition) and will include a lot of new material on bird evolution from feathered dinosaurs (which of course will be unfeathered feathered dinosaurs, if you see what I mean). There’ll be lots of new and replacement illustrations and the text will be completely re-written. The science will be better, but the book will still be accessible to anyone and devoid of jargon. However, this shouldn’t put people off buying the original version: the new edition will be virtually a different book.

I’m also intending to write an autobiography/memoir type book focusing on the relationship between art, science, and illustration, and will be looking for a publisher for that. This won’t be illustrated though, so it would be a comparatively quick one!

Unnatural Selection was published in June 2018 and is currently on offer for £26.99 (RRP £34.99).

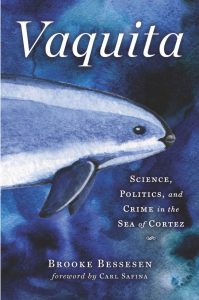

The vaquita entered the collective imagination (or at least, my imagination) when it became world news somewhere in 2017 and there was talk of trying to catch the last remaining individuals, something which you describe at the end of your story. Going back to the beginning though, how did you cross the path of this little porpoise?

Once your investigation on the ground in Mexico gets going, tensions quickly run high. This is where conservation clashes with the hard reality of humans trying to make a living. Corruption, intimidation and threats are not uncommon. Was there ever a point that you were close to pulling out because the situation became too dangerous?

As the story progresses, more and more foreign interests enter this story. Sea Shepherd starts patrolling the waters, and Leonardo DiCaprio also gets involved, signing a memorandum of understanding with the Mexican president to try and turn the tide. What was the reaction of Mexicans on the ground to this kind of foreign involvement? Are we seen as sentimental, spoiled, rich Westerners who can afford unrealistic attitudes?

One side of the story I found missing from your book was that of the demand for totoaba swim bladders in China. I imagine it might have been too dangerous or time-consuming (or both) to expand your investigation to China as well. How important and how feasible do you think it is to tackle the problem from that side? Without a demand for totoaba bladders, the vaquita wouldn’t face the threats of gillnets after all.

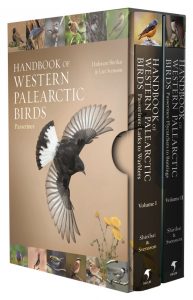

Lars: Let us say that in my case only 15 of these 18 years were spent on the new handbook. I was involved in doing the second edition of the

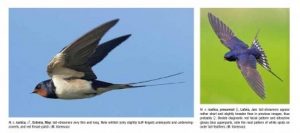

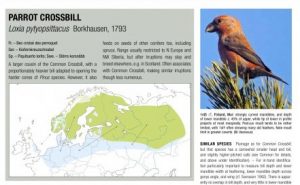

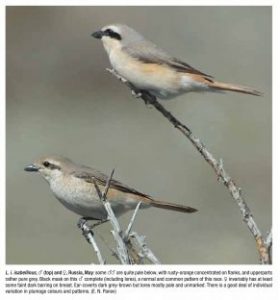

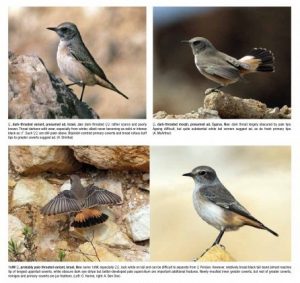

Hadoram: At least as I see it, BWP was made some 20-30 years ago when it was still possible to squeeze information into one project – on distribution, population estimates, atlas, ecology, seasonal biology, behaviour, voice, and so on, as well as identification and variation (but with generally only very basic illustrations, paintings) – and to be satisfied with it!

Lars: To assemble the many photographs (there are well over 4000 photographs together in the two passerine volumes) and to achieve full coverage of male and female, young and adult, sometimes also in both spring and autumn, plus examples of distinct subspecies, was of course a major challenge. Hadoram was in charge of this and did a great job, and we got good support from our publisher setting up a home page for the project where photographers could see what we still needed photographs of. Thanks to the prolonged production, many gaps (if not all!) were filled during our course.

Hadoram: In his answers, Lars described very well the combined efforts of the museum collections work and of building the photographic collections and selections for the project.

Lars: Digital photography and the existence of the internet and websites for documentation and discussion of bird images are the main changes compared to when I started as a birdwatcher. This has speeded up the exchange of news and knowledge in a remarkable way. True, field-guides and birding journals keep offering better and better advice on tricky identification problems, and optical aids have developed to a much higher standard than say 50 years ago. But the new cameras with stabilised lenses and autofocus have meant a lot and have enabled so-called ‘ordinary’ birders to take excellent photographs. This has broadened the cadre of photographers and multiplied the production of top class images of previously rarely photographed species. Which of course made

Lars: I have planned for many years now to revise my

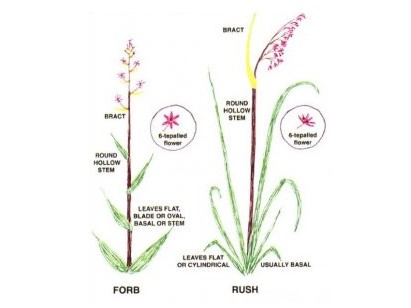

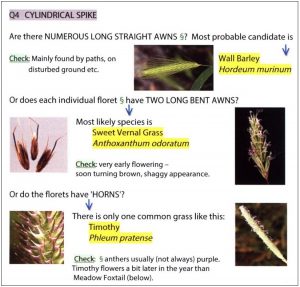

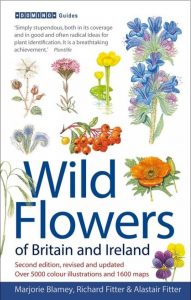

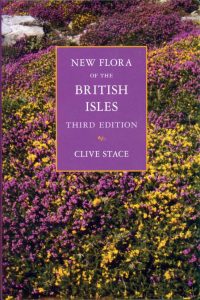

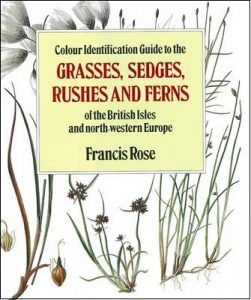

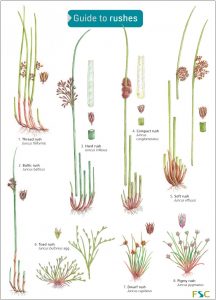

My books are not field guides at all. A field guide gives you a list of plant names, with pictures and descriptions, sometimes with a brief introduction. My books are all introduction: to field botany in general, to plant families and to grasses (maybe sedges and rushes next year . . .). A beginner armed only with a field guide has either to work their way from scratch through complicated keys, or to play snap: plant in one hand, book in the other; turn the pages until you find a picture that matches – ‘snap!’ By contrast, my books lead you into plant identification by logical routes, showing you where to look and what to look for. Their aim is to show you how to do ID for yourself.

If you possibly can, go on a workshop for a day, a weekend or even a whole week. Having a real live person giving enthusiastic teaching, someone to answer all your questions and fresh plants to study is the best thing you can do. Look for a local botany or natural history group you can join, and go on their field meetings. When you get even a little more serious about your study, join Plantlife and/or the Botanical Society of Britain & Ireland. Identiplant is a very good online course but it is not really suitable for absolute beginners. Be a bit careful with apps and websites: some are incredibly complex, some seem to be aimed at five-year-olds, others are just inaccurate and misleading. The best ID websites I am aware of are run by the

The main threat is not people picking a few to study, or even simply to enjoy at home. Climate change is, of course, a threat in one sense, but I believe we shouldn’t necessarily dread the rise of ‘aliens’ from warmer climates which are now able to establish themselves here. Every plant in Britain was once an alien, after the last Ice Age ended, and I would rather learn to live with change than blindly try to turn the clock back. There may be a few exceptions like Japanese Knotweed, but their evils are often exaggerated and some natives like bracken can be equally invasive. The real problem we face is habitat loss: to house-building and industry, land drainage, vast monocultural fields without headlands, destruction of ancient forests and so on. And this is an area where watchfulness and action really can make a difference.

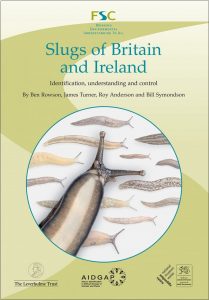

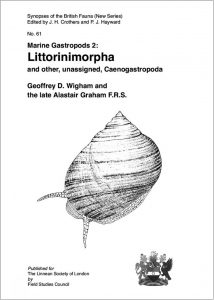

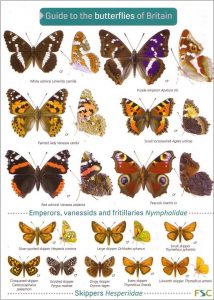

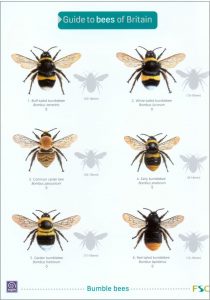

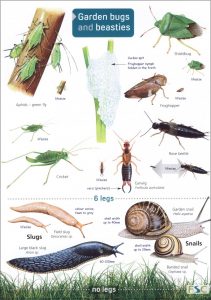

The Field Studies Council (FSC) is the NHBS Publisher of the Month for June.

Mary Colwell is an award-winning writer and producer who is well known for her work with BBC Radio producing programmes on natural history and environmental issues, including their Natural Histories, Shared Planet and Saving Species series.

The unique features of churchyards mean that they offer a valuable niche for many species. Enclosed churchyards in particular provide a time-capsule and a window into the components of an ancient British landscape. Well-known botanist, mycologist and broadcaster Stefan Buczacki has written

Managing a cemetery in a way that keeps everyone happy seems an impossible job. Last August I was photographing a meadow that had sprung up at a cemetery, when another photographer mentioned how disgusting it was. I was slightly bemused until the man explained he was a town councillor and was disgusted that the cemetery was unmaintained – “an insult to the dead” was how he described it. I thought it looked fantastic! Whatever your opinion, how can we achieve common ground between such diametrically opposed views?

All the significant flora and fauna of churchyards have their own chapters or sections, from fungi, lichen and plants to birds, reptiles, amphibians and mammals. Which class, order or even species do you think has the closest association with churchyards and therefore the most to gain or lose from churchyards’ future conservation status?

With church attendance declining and the future of churchyard maintenance an increasingly secular concern, could you give a brief first-steps outline as to how an individual or a group might set about conserving and even improving the natural history of their local churchyard?

If someone, or a group, becomes custodian of a churchyard, what five key actions or augmentations would you most recommend, and what two actions would you recommend against?